Publications

-

LatteCLIP: Unsupervised CLIP Fine-Tuning via LMM-Synthetic TextsAnh-Quan Cao, Maximilian Jaritz, Matthieu Guillaumin, Raoul de Charette, Loris BazzaniarXiv 2024

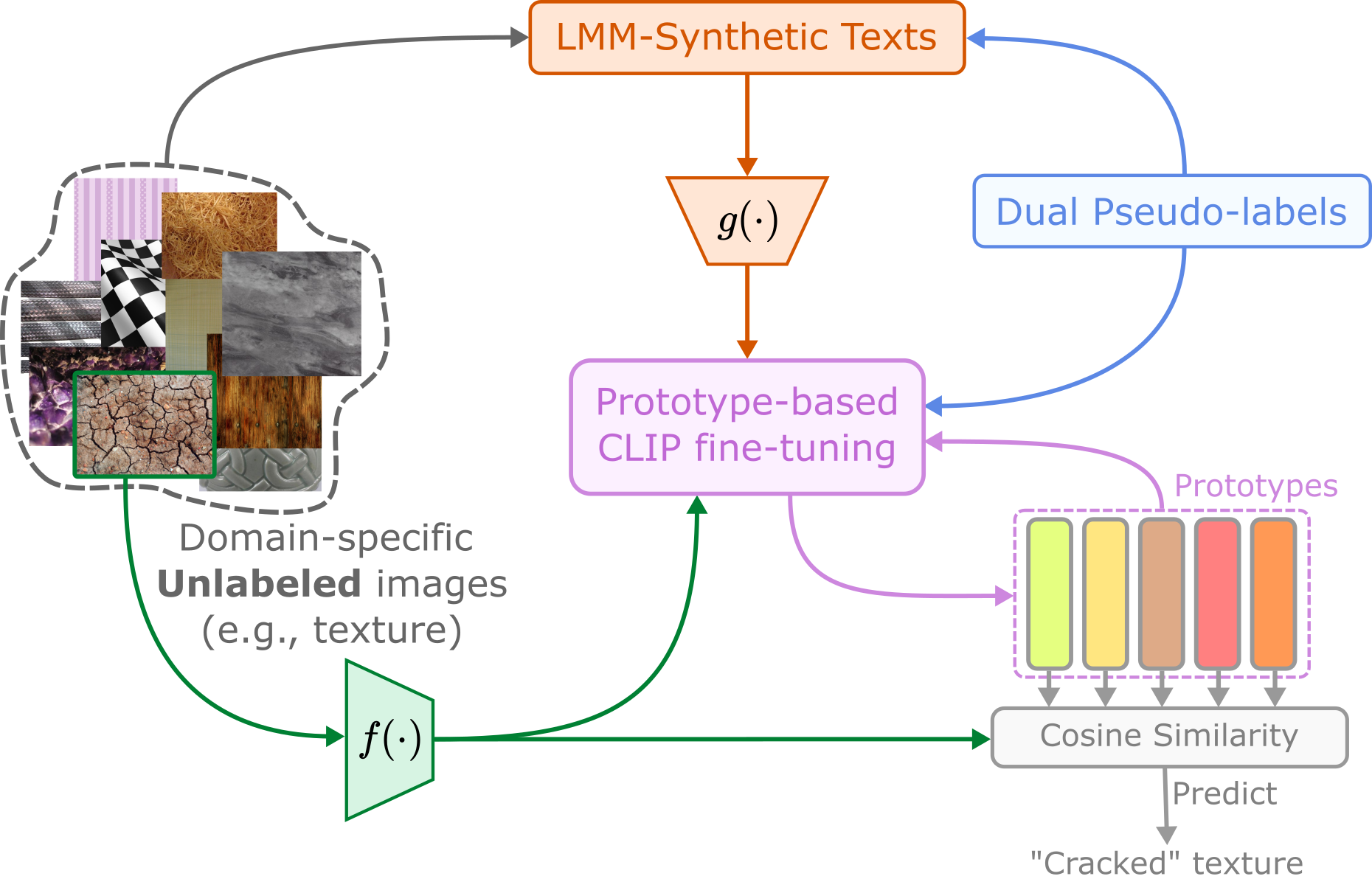

LatteCLIP: Unsupervised CLIP Fine-Tuning via LMM-Synthetic TextsAnh-Quan Cao, Maximilian Jaritz, Matthieu Guillaumin, Raoul de Charette, Loris BazzaniarXiv 2024Large-scale vision-language pre-trained (VLP) models (e.g., CLIP) are renowned for their versatility, as they can be applied to diverse applications in a zero-shot setup. However, when these models are used in specific domains, their performance often falls short due to domain gaps or the under-representation of these domains in the training data. While fine-tuning VLP models on custom datasets with human-annotated labels can address this issue, annotating even a small-scale dataset (e.g., 100k samples) can be an expensive endeavor, often requiring expert annotators if the task is complex. To address these challenges, we propose LatteCLIP, an unsupervised method to fine-tune CLIP models for custom domains without relying on human annotations. Our method leverages Large Multimodal Models (LMMs) to generate expressive textual descriptions for both individual images and groups of images. These provide additional contextual information to guide the fine-tuning process in the custom domains. Since LMM-generated descriptions are prone to hallucination or missing details, we introduce a novel strategy to distill only the useful information and stabilize the training. Specifically, we learn per-class prototype representations, that combine noisy generated texts and pseudo-labels in a single vector. Our experiments on 10 domain-specific datasets show that LATTECLIP outperforms pre-trained zero-shot methods by an average improvement of +4.74 points in top-1 accuracy and other state-of-the-art unsupervised methods by +3.45 points.

@inproceedings{cao2024latteclip, title = {LatteCLIP: Unsupervised CLIP Fine-Tuning via LMM-Synthetic Texts}, authors = {Anh-Quan Cao, Maximilian Jaritz, Matthieu Guillaumin, Raoul de Charette, Loris Bazzani}, booktitle = {arXiv}, year = {2024}, } - PaSCo: Urban 3D Panoptic Scene Completion with Uncertainty AwarenessAnh-Quan Cao, Angela Dai, Raoul de CharetteCVPR 2024Oral, Best Paper Award Candidate.

We propose the task of Panoptic Scene Completion (PSC) which extends the recently popular Semantic Scene Completion (SSC) task with instance-level information to produce a richer understanding of the 3D scene. Our PSC proposal utilizes a hybrid mask-based technique on the non-empty voxels from sparse multi-scale completions. Whereas the SSC literature overlooks uncertainty which is critical for robotics applications, we instead propose an efficient ensembling to estimate both voxel-wise and instance-wise uncertainties along PSC. This is achieved by building on a multi-input multi-output (MIMO) strategy, while improving performance and yielding better uncertainty for little additional compute. Additionally, we introduce a technique to aggregate permutation-invariant mask predictions. Our experiments demonstrate that our method surpasses all baselines in both Panoptic Scene Completion and uncertainty estimation on three large-scale autonomous driving datasets.

@inproceedings{cao2024pasco, title = {PaSCo: Urban 3D Panoptic Scene Completion with Uncertainty Awareness}, authors = {Anh-Quan Cao, Angela Dai, Raoul de Charette}, booktitle = {CVPR}, year = {2024}, oral_pasco = true }

-

SceneRF: Self-Supervised Monocular 3D Scene Reconstruction with Radiance FieldsAnh-Quan Cao, Raoul de CharetteICCV 2023

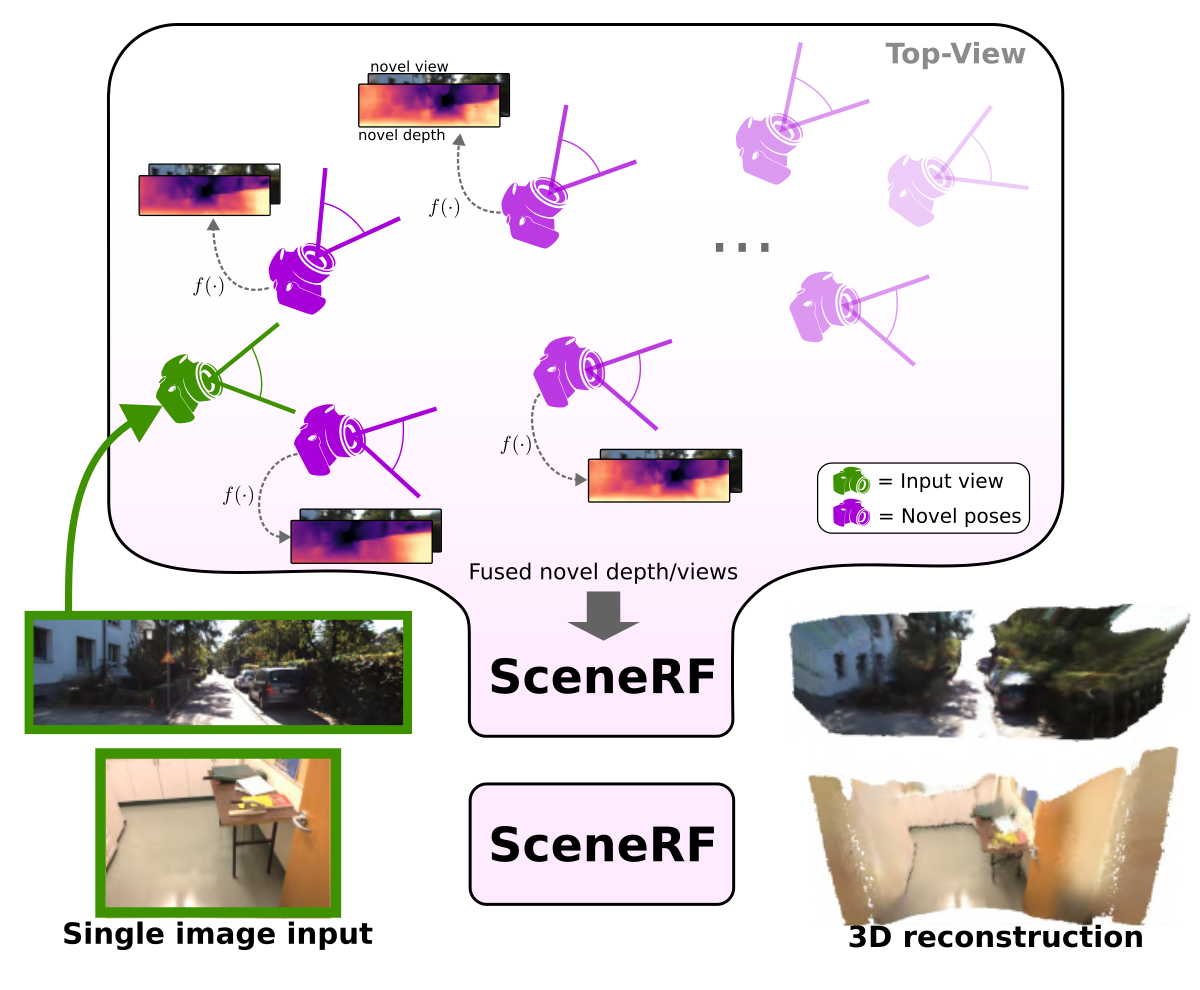

SceneRF: Self-Supervised Monocular 3D Scene Reconstruction with Radiance FieldsAnh-Quan Cao, Raoul de CharetteICCV 2023In the literature, 3D reconstruction from 2D image has been extensively addressed but often still requires geometrical supervision. In this paper, we propose a self-supervised monocular scene reconstruction method with neural radiance fields (NeRF) learned from multiple image sequences with pose. To improve geometry prediction, we introduce new geometry constraints and a novel probabilistic sampling strategy that efficiently update radiance fields. As the latter are conditioned on a single frame, scene reconstruction is achieved from the fusion of multiple synthetized novel depth views. This is enabled by our spherical-decoder which allows hallucination beyond the input frame field of view. Thorough experiments demonstrate that we outperform all baselines on all metrics for novel depth views synthesis and scene reconstruction.

@inproceedings{cao2022scenerf, title = {SceneRF: Self-Supervised Monocular 3D Scene Reconstruction with Radiance Fields}, authors = {Anh-Quan Cao, Raoul de Charette}, booktitle = {ICCV}, year = {2023}, }

-

COARSE3D: Class-Prototypes for Contrastive Learning in Weakly-Supervised 3D Point Cloud SegmentationRong Li, Anh-Quan Cao, Raoul de CharetteBMVC 2022

COARSE3D: Class-Prototypes for Contrastive Learning in Weakly-Supervised 3D Point Cloud SegmentationRong Li, Anh-Quan Cao, Raoul de CharetteBMVC 2022Annotation of large-scale 3D data is notoriously cumbersome and costly. As an alternative, weakly-supervised learning alleviates such a need by reducing the annotation by several order of magnitudes. We propose a novel architecture-agnostic contrastive learning strategy for 3D segmentation. Since contrastive learning requires rich and diverse examples as keys and anchors, we propose a prototype memory bank capturing class-wise global dataset information efficiently into a small number of prototypes acting as keys. An entropy-driven sampling technique then allows us to select good pixels from predictions as anchors. Experiments using a light-weight projection-based backbone show we outperform baselines on three challenging real-world outdoor datasets, working with as low as 0.001% annotations.

@inproceedings{rong2022coarse3d, title = {COARSE3D: Class-Prototypes for Contrastive Learning in Weakly-Supervised 3D Point Cloud Segmentation}, authors = {Rong Li, Anh-Quan Cao, Raoul de Charette}, booktitle = {BMVC}, year = {2022}, } -

MonoScene: Monocular 3D Semantic Scene CompletionAnh-Quan Cao, Raoul de CharetteCVPR 2022

MonoScene: Monocular 3D Semantic Scene CompletionAnh-Quan Cao, Raoul de CharetteCVPR 2022MonoScene proposes a 3D Semantic Scene Completion (SSC) framework, where the dense geometry and semantics of a scene are inferred from a single monocular RGB image. Different from the SSC literature, relying on 2.5 or 3D input, we solve the complex problem of 2D to 3D scene reconstruction while jointly inferring its semantics. Our framework relies on successive 2D and 3D UNets bridged by a novel 2D-3D features projection inspiring from optics and introduces a 3D context relation prior to enforce spatio-semantic consistency. Along with architectural contributions, we introduce novel global scene and local frustums losses. Experiments show we outperform the literature on all metrics and datasets while hallucinating plausible scenery even beyond the camera field of view. Our code and trained models are available at https://astra-vision.github.io/MonoScene/.

@inproceedings{cao2022monoscene, title = {MonoScene: Monocular 3D Semantic Scene Completion}, authors = {Anh-Quan Cao, Raoul de Charette}, booktitle = {CVPR}, year = {2022}, }

-

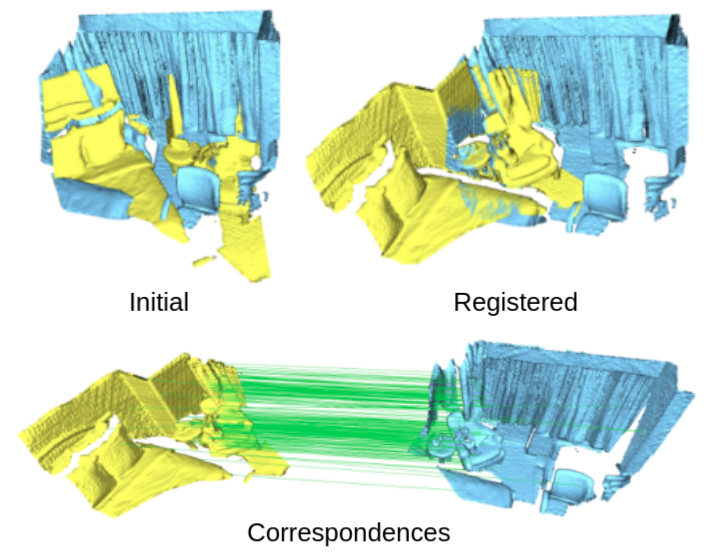

PCAM: Product of Cross-Attention Matrices for Rigid Registration of Point CloudsAnh-Quan Cao, Gilles Puy, Alexandre Boulch, Renaud MarletICCV 2021

PCAM: Product of Cross-Attention Matrices for Rigid Registration of Point CloudsAnh-Quan Cao, Gilles Puy, Alexandre Boulch, Renaud MarletICCV 2021Rigid registration of point clouds with partial overlaps is a longstanding problem usually solved in two steps: (a) finding correspondences between the point clouds; (b) filtering these correspondences to keep only the most reliable ones to estimate the transformation. Recently, several deep nets have been proposed to solve these steps jointly. We built upon these works and propose PCAM: a neural network whose key element is a pointwise product of cross-attention matrices that permits to mix both low-level geometric and high-level contextual information to find point correspondences. A second key element is the exchange of information between the point clouds at each layer, allowing the network to exploit context information from both point clouds to find the best matching point within the overlapping regions. The experiments show that PCAM achieves state-of-the-art results among methods which, like us, solve steps (a) and (b) jointly via deepnets.

@inproceedings{cao21pcam, title = {PCAM: Product of Cross-Attention Matrices for Rigid Registration of Point Clouds}, authors = {Anh-Quan Cao, Gilles Puy, Alexandre Boulch, Renaud Marlet}, booktitle = {ICCV}, year = {2021}, }